Final Presentation:

This third and final assignment was a collaboration with other students in my studio section. As a culmination of our work in understanding semantic spaces, training neural networks, and designing objects guided by them, we were tasked with creating an architecture for a linear exhibit. My contributions, with further descriptions of each, can be seen below.

Group Members:

Leon Carranza

Vanessa Yang

Rae Chen

Rohan Sampat

Research

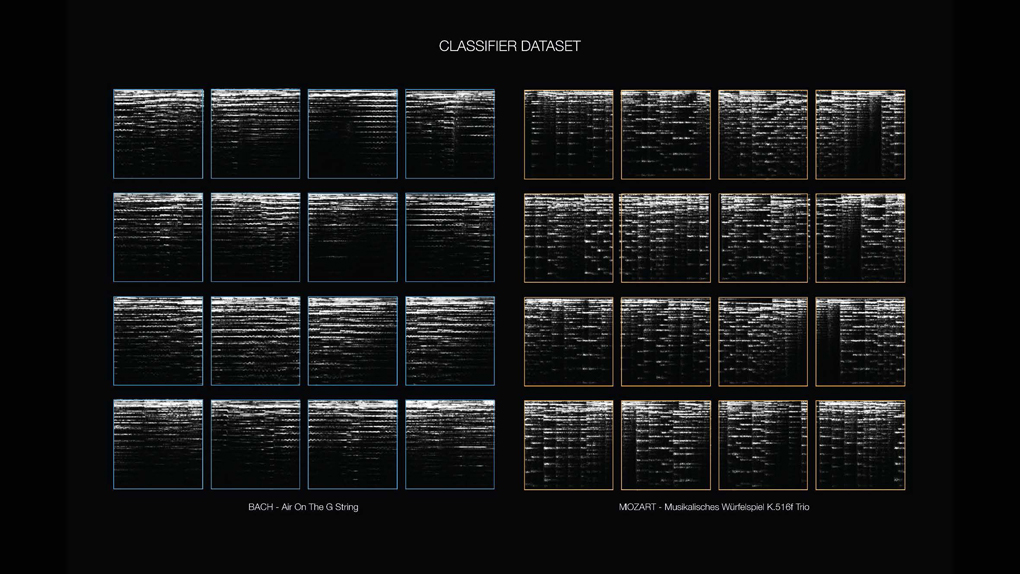

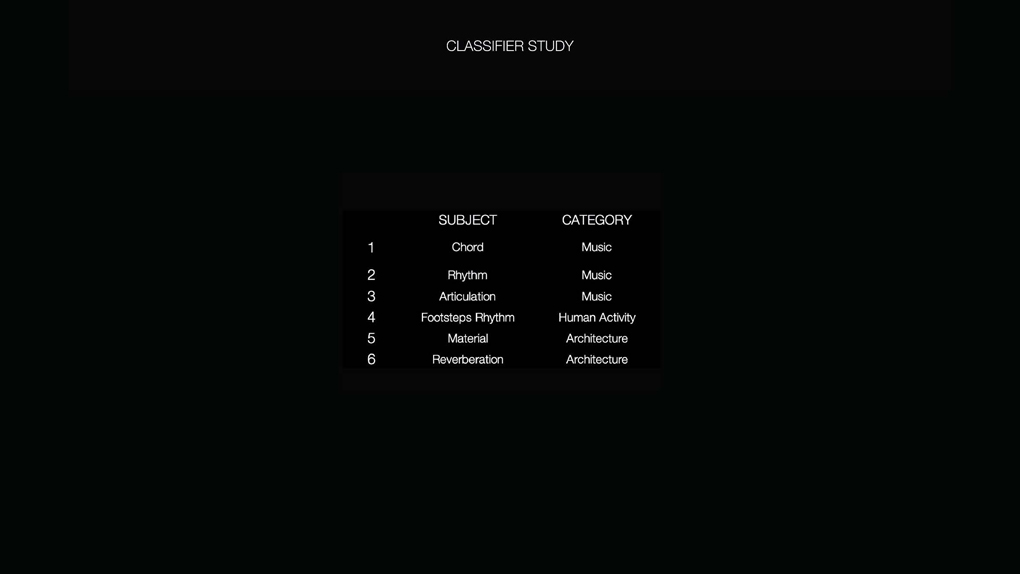

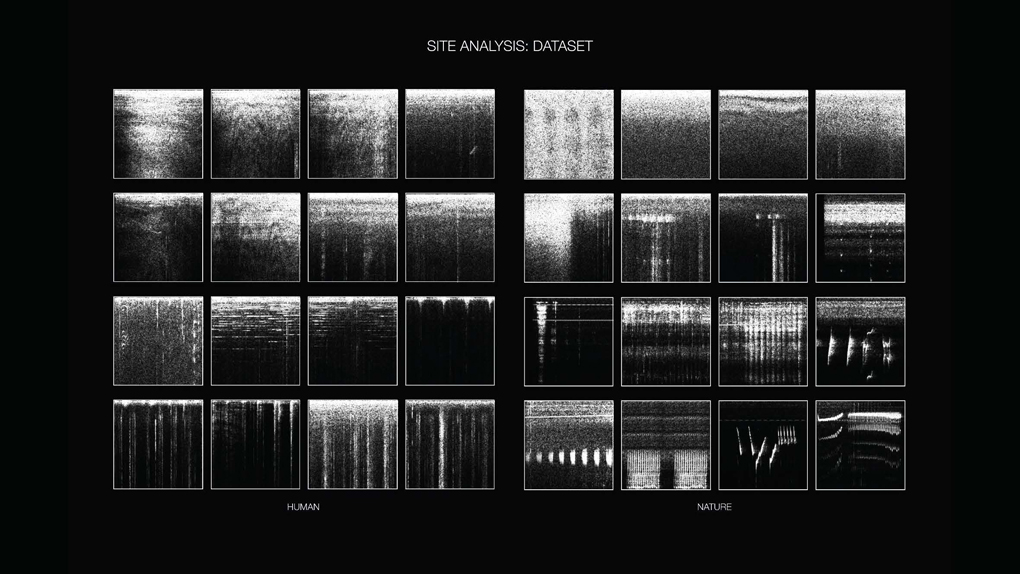

This is the initial research that would build our perceptual model. It began with training the model on short, 2-3 second spectrograms of each musical piece.
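As a rough illustration of the step described above, the sketch below cuts a recording into short clips and turns each one into a spectrogram. It is not the pipeline we actually used: the function names, the 2.5 second clip length, the frame and hop sizes, and the synthetic test tone are all assumptions chosen for the example, and it relies only on NumPy.

```python
import numpy as np

def split_into_clips(signal, sample_rate, clip_seconds=2.5):
    """Cut a mono signal into consecutive clips of equal length (remainder dropped)."""
    clip_len = int(clip_seconds * sample_rate)
    n_clips = len(signal) // clip_len
    return [signal[i * clip_len:(i + 1) * clip_len] for i in range(n_clips)]

def spectrogram(clip, frame_size=1024, hop=256):
    """Log-magnitude spectrogram from a Hann-windowed short-time Fourier transform."""
    window = np.hanning(frame_size)
    n_frames = 1 + (len(clip) - frame_size) // hop
    frames = np.stack([
        clip[i * hop:i * hop + frame_size] * window
        for i in range(n_frames)
    ])
    magnitude = np.abs(np.fft.rfft(frames, axis=1))
    # Transpose so rows are frequency bins and columns are time frames,
    # which is the orientation a spectrogram image is usually drawn in.
    return 20 * np.log10(magnitude + 1e-10).T

# Stand-in for a real recording: six seconds of a 440 Hz sine tone (assumed input).
sample_rate = 22050
t = np.arange(sample_rate * 6) / sample_rate
signal = np.sin(2 * np.pi * 440 * t)

clips = split_into_clips(signal, sample_rate)
specs = [spectrogram(clip) for clip in clips]
```

Each array in `specs` can be saved as an image and labeled by composer, which is the form in which a classifier would "see" the music.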

Extracting Spectrograms from Two Artists

Moving Between Polarities

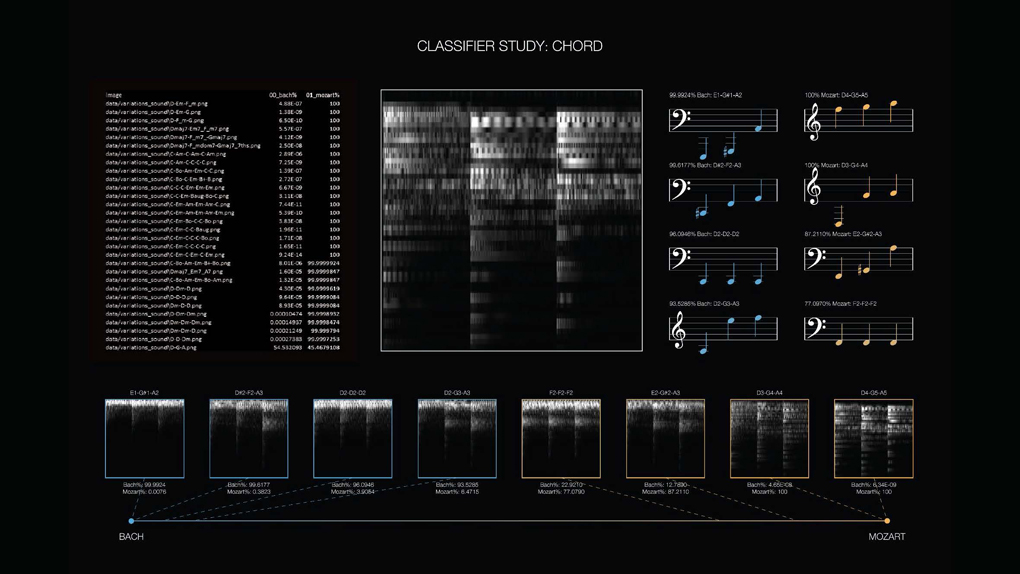

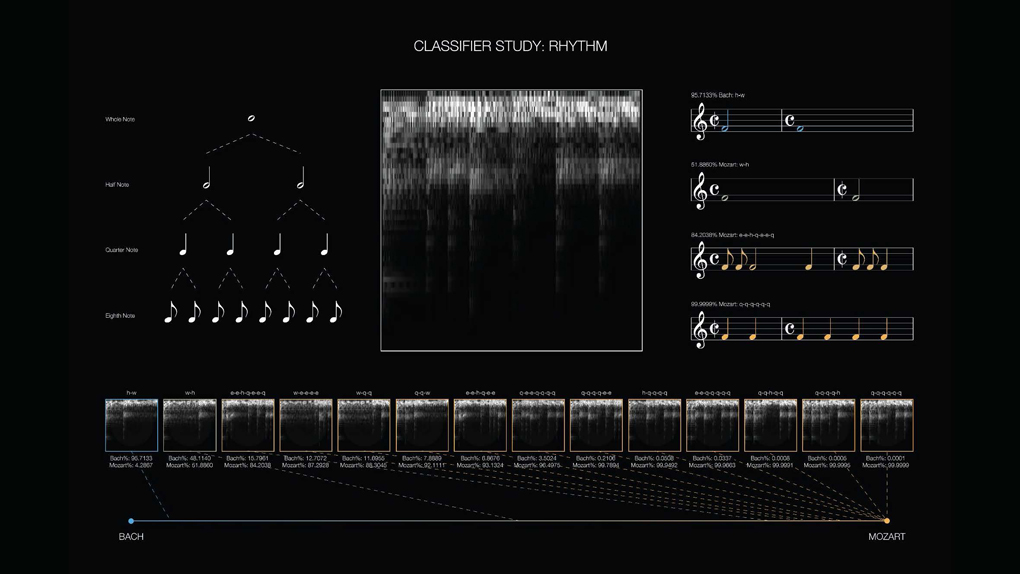

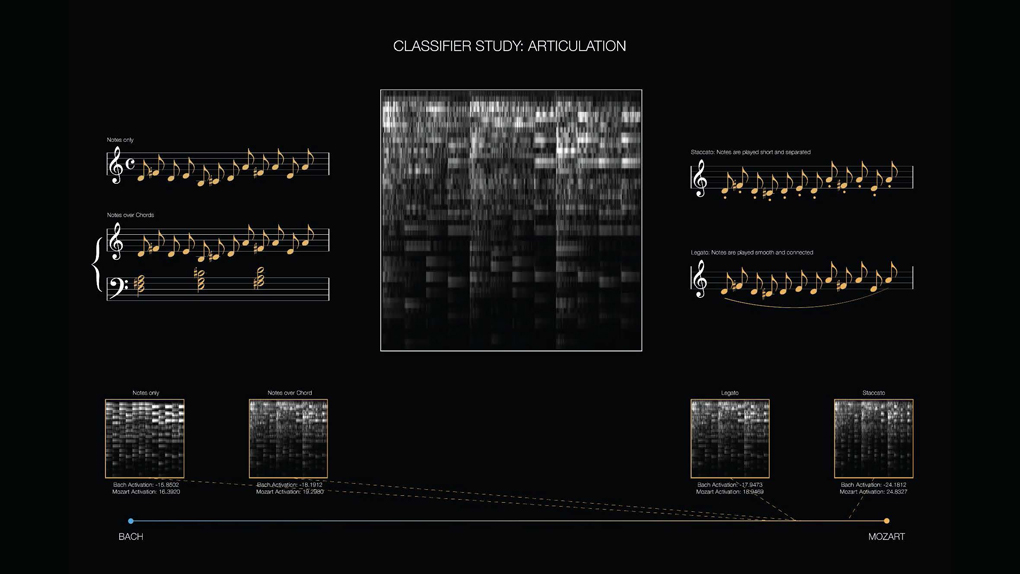

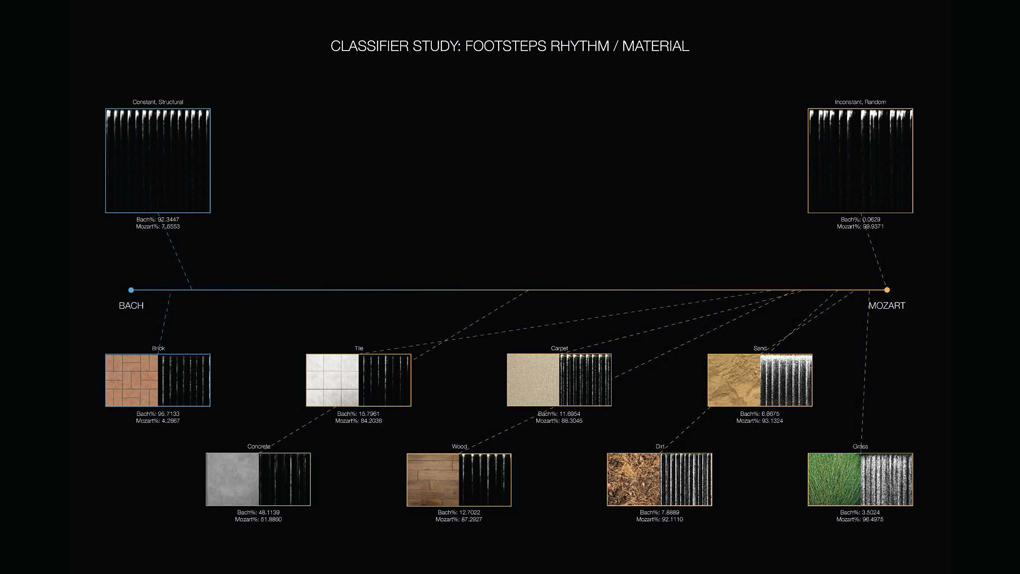

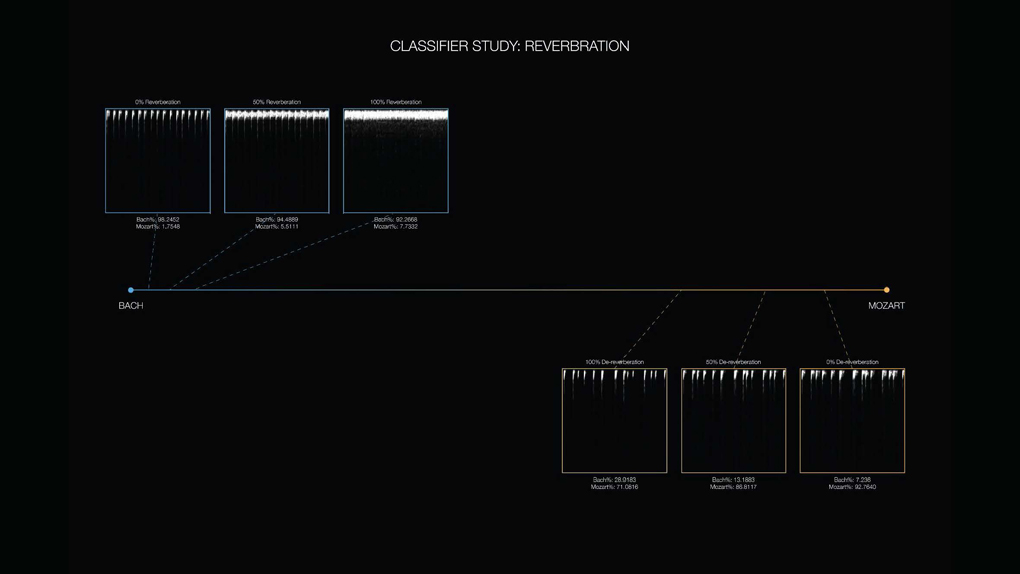

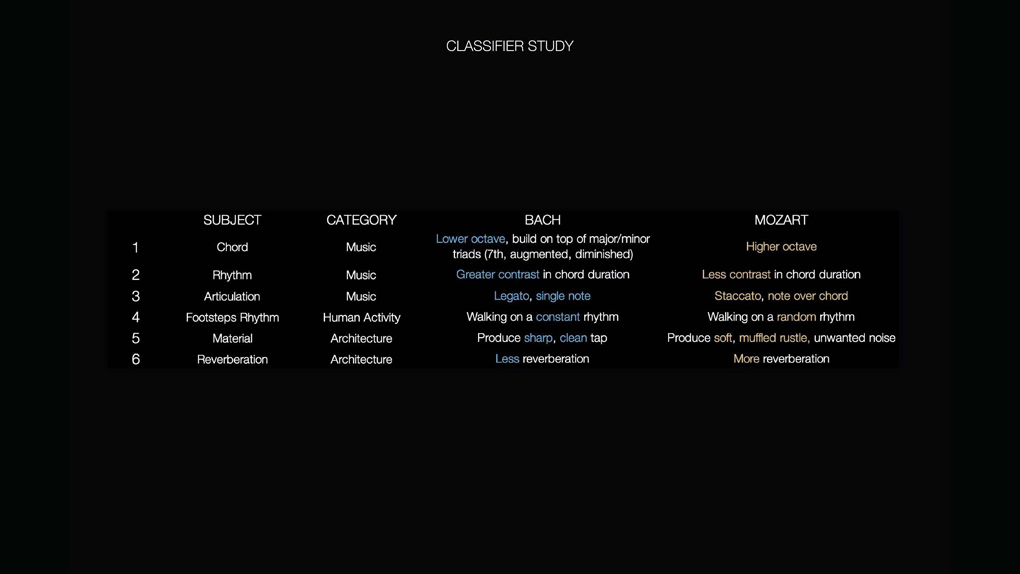

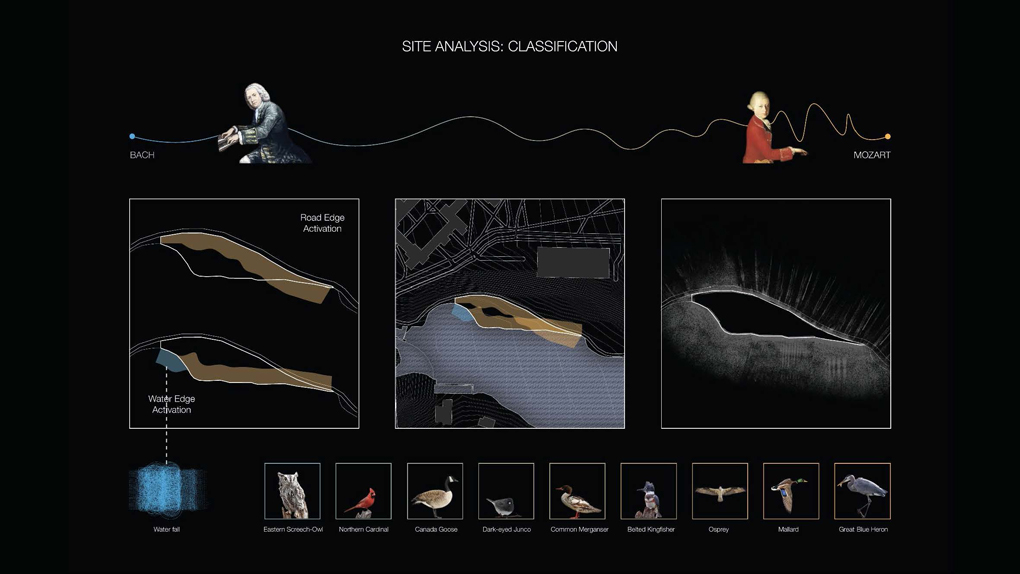

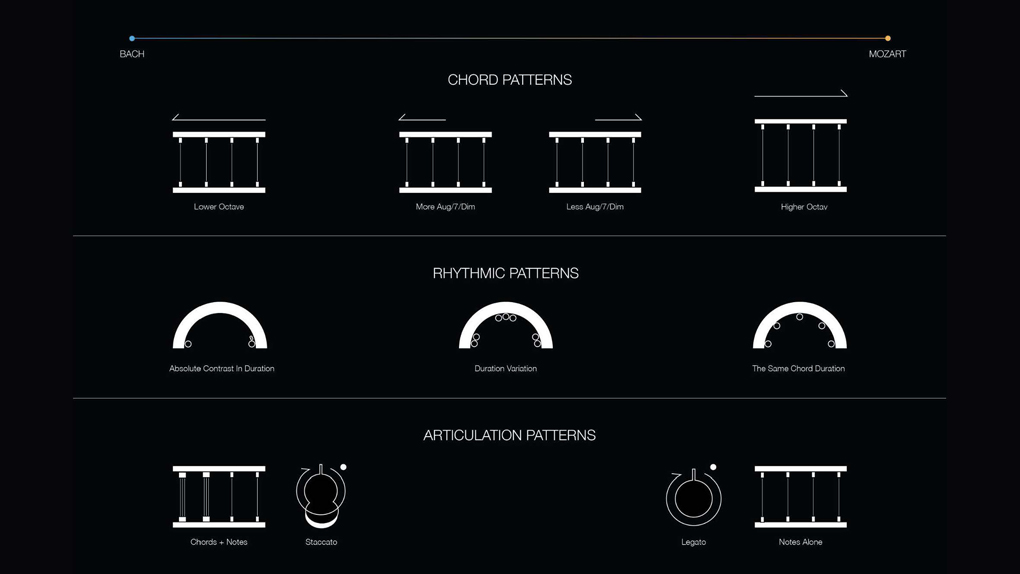

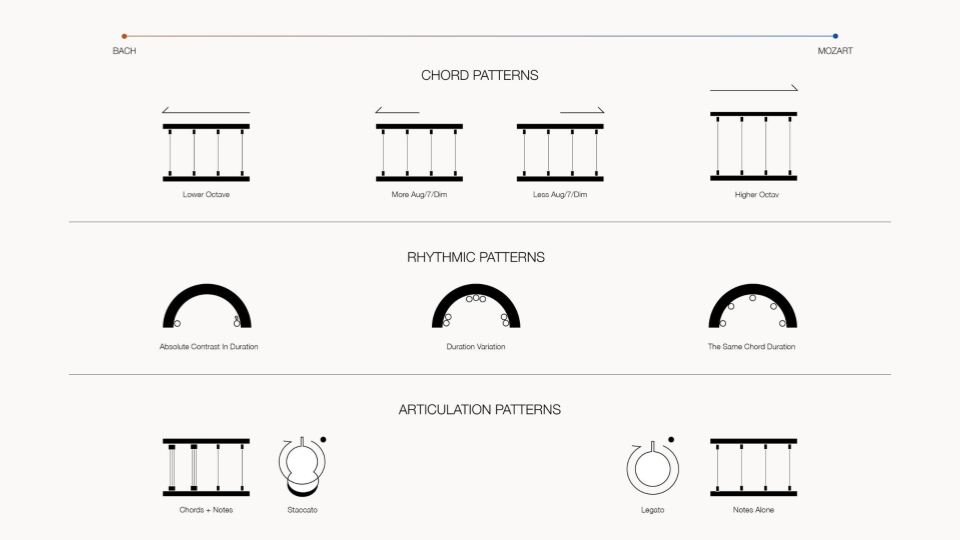

Using a digital audio workstation, I then created short sound clips with distinct sound qualities such as chord patterns, chord shapes (both those found in classical music and those more common to other genres), staccato and legato, overall frequencies, and octaves.

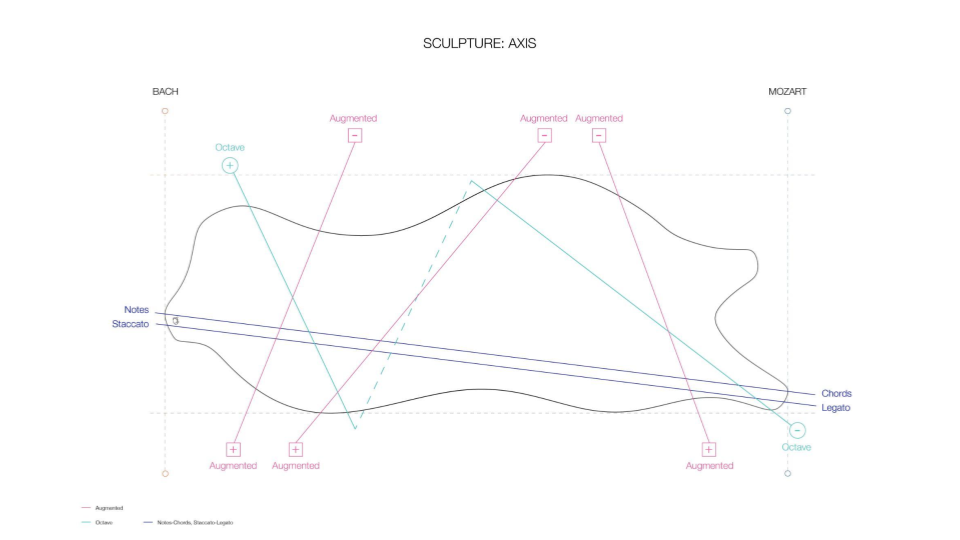

The result was a gradient showing where each of these parameters fell and how it transitioned between Bach and Mozart as they exist in a semantic space in relation to each other. The way each parameter affected the quality of sound was apparent not only to the ear, but also through changes, either slight or major, that can be observed in the shape of each spectrogram. It is ultimately these differences in shape that the computer actually "sees" and classifies.
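One way to picture this gradient is to place each clip on a single axis running from Bach to Mozart. The sketch below assumes a classifier that returns a pair of scores per clip; the `polarity` function, the clip names, and the score values are hypothetical placeholders made up for illustration, not results from our model.

```python
def polarity(p_bach, p_mozart):
    """Map a pair of classifier scores to [-1, 1]: -1 is fully Bach, +1 is fully Mozart."""
    total = p_bach + p_mozart
    return (p_mozart - p_bach) / total

# Placeholder scores (p_bach, p_mozart) for three hypothetical test clips.
clip_scores = {
    "staccato": (0.8, 0.2),
    "legato": (0.3, 0.7),
    "low_octave": (0.5, 0.5),
}

# Order the clips along the Bach-to-Mozart axis.
gradient = sorted(clip_scores, key=lambda name: polarity(*clip_scores[name]))

for name in gradient:
    print(f"{name}: {polarity(*clip_scores[name]):+.2f}")
```

Reading the sorted list from start to end traces the transition from one pole to the other.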

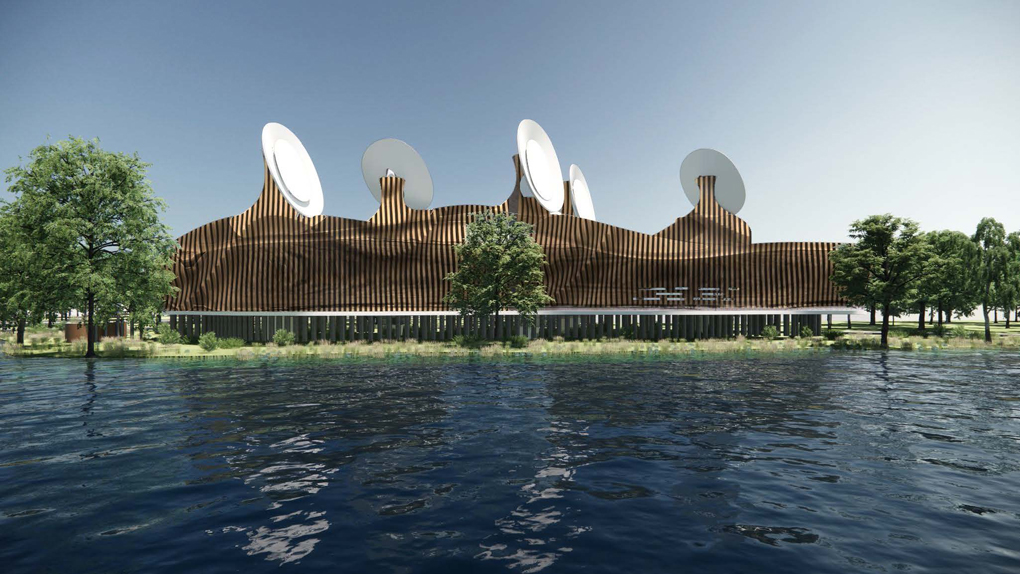

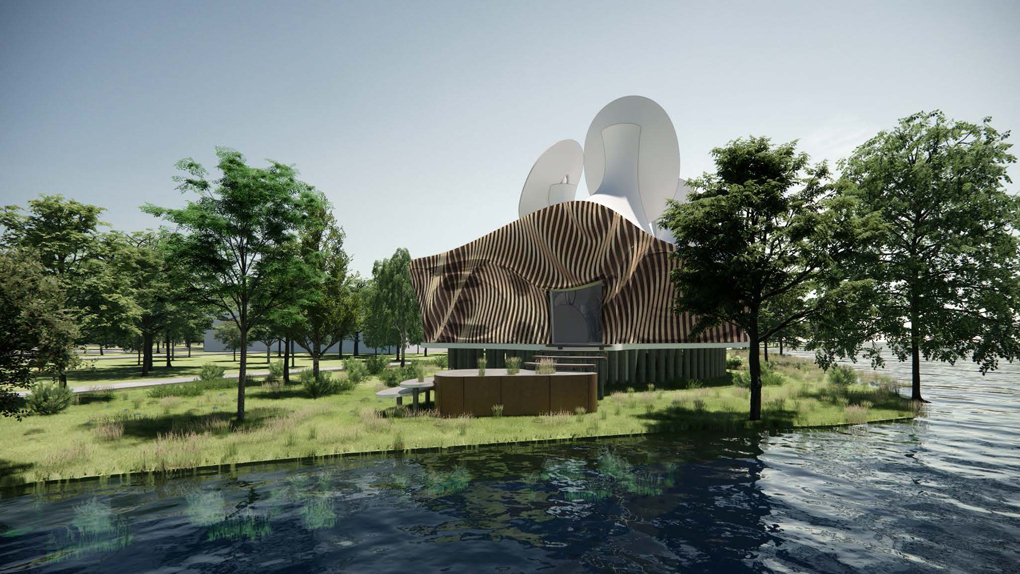

Spatial Logic

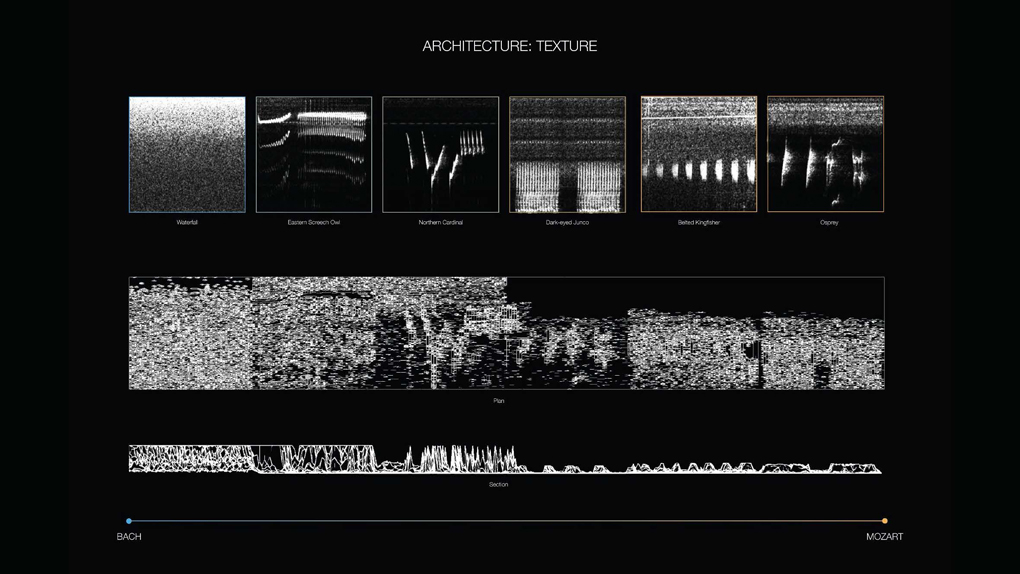

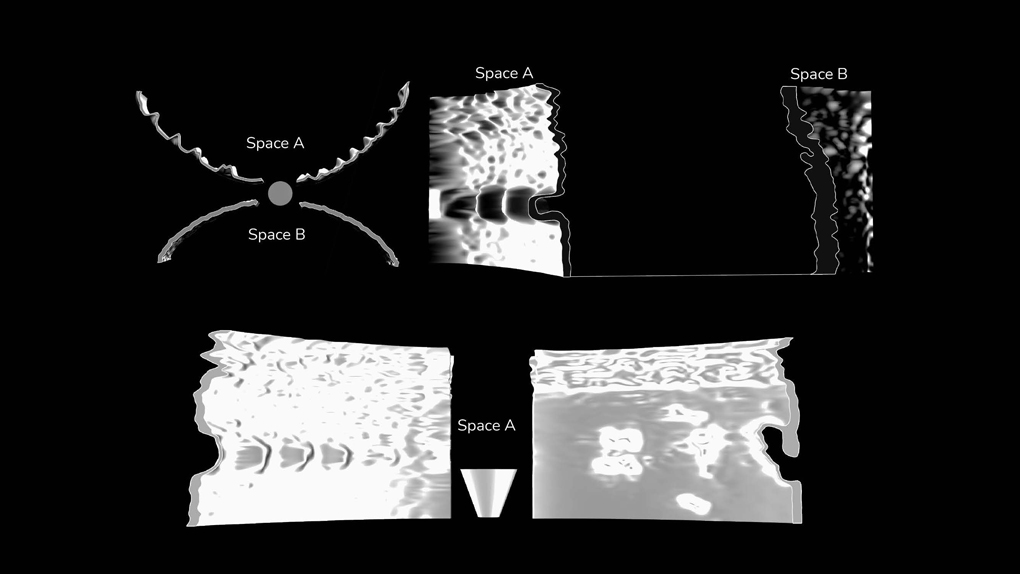

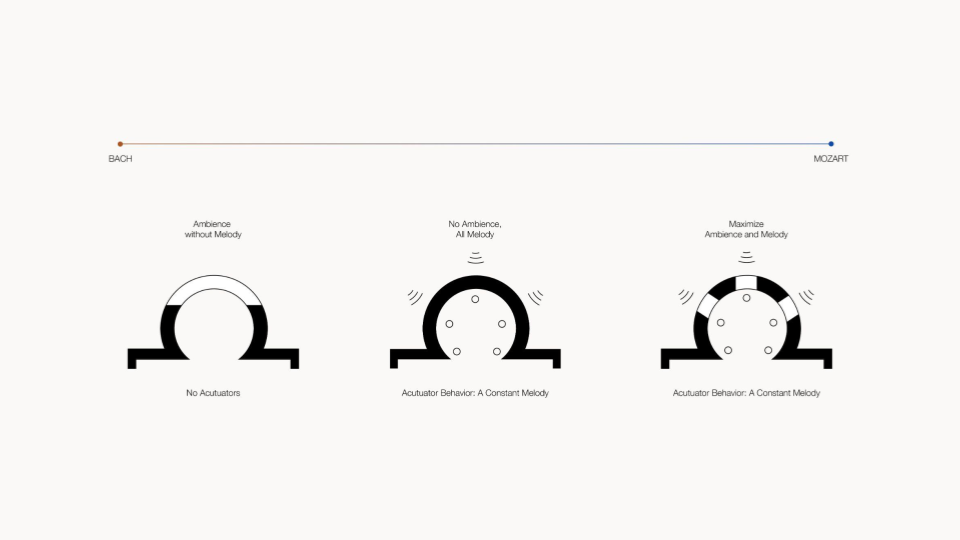

Formal Parameters

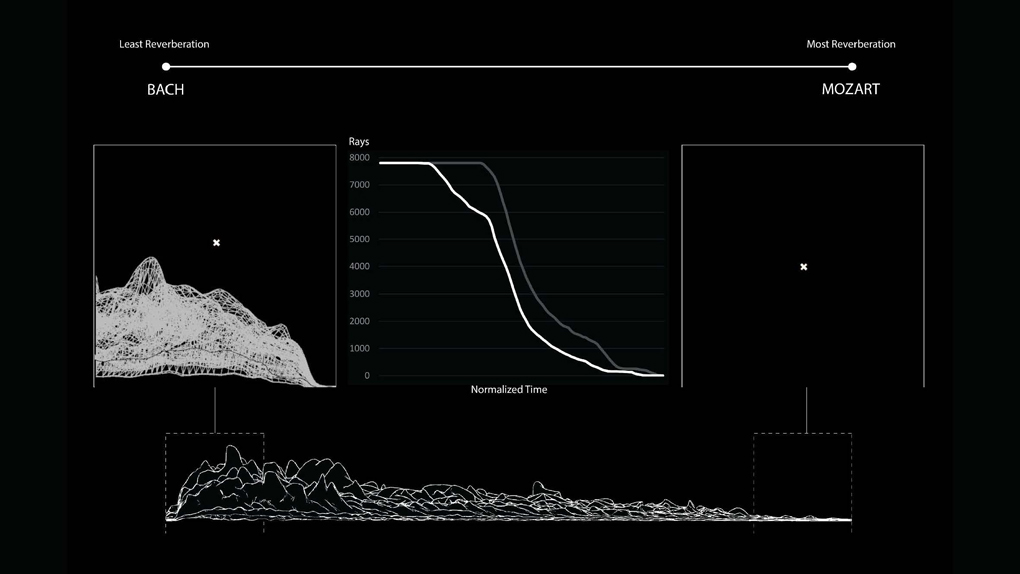

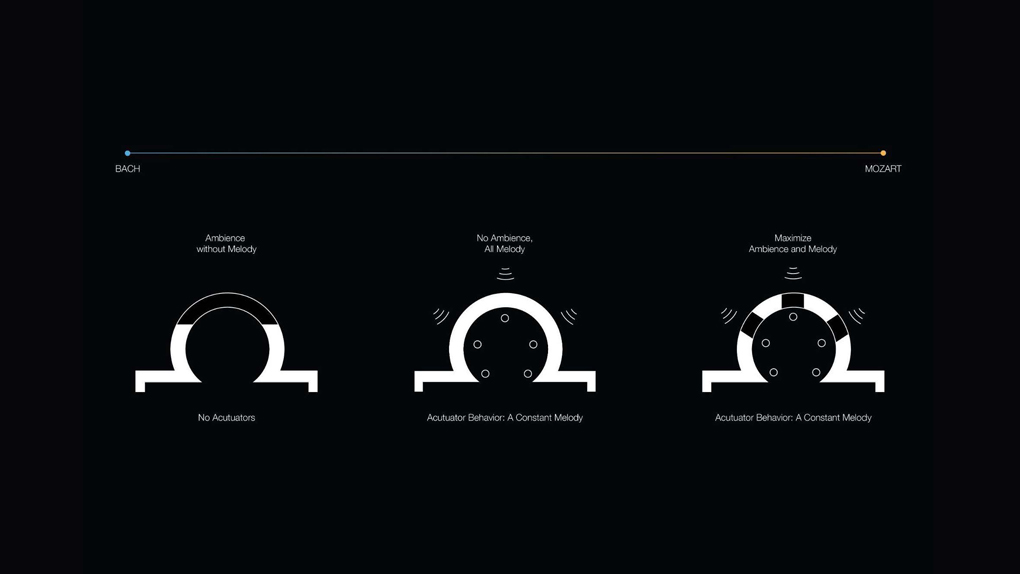

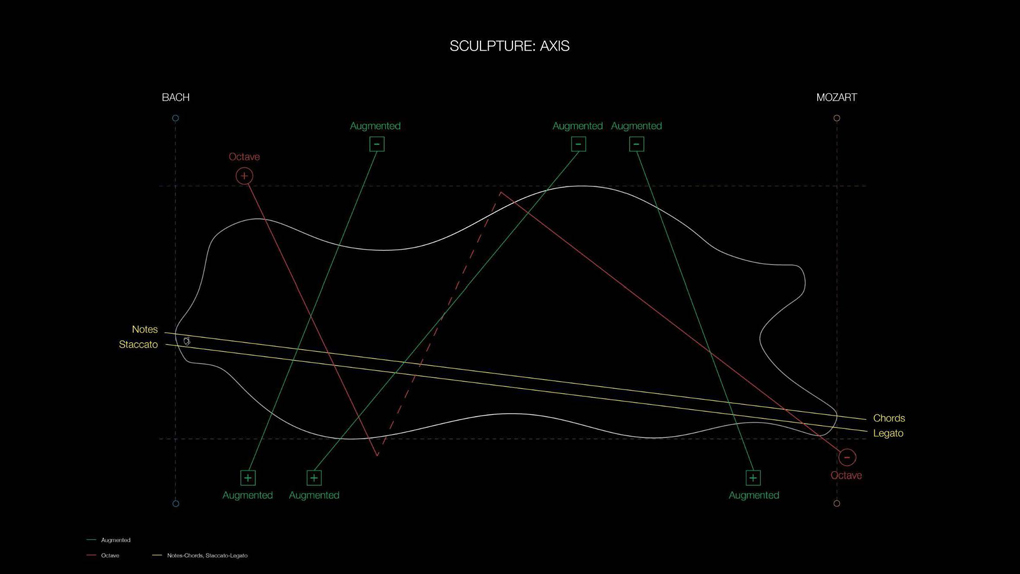

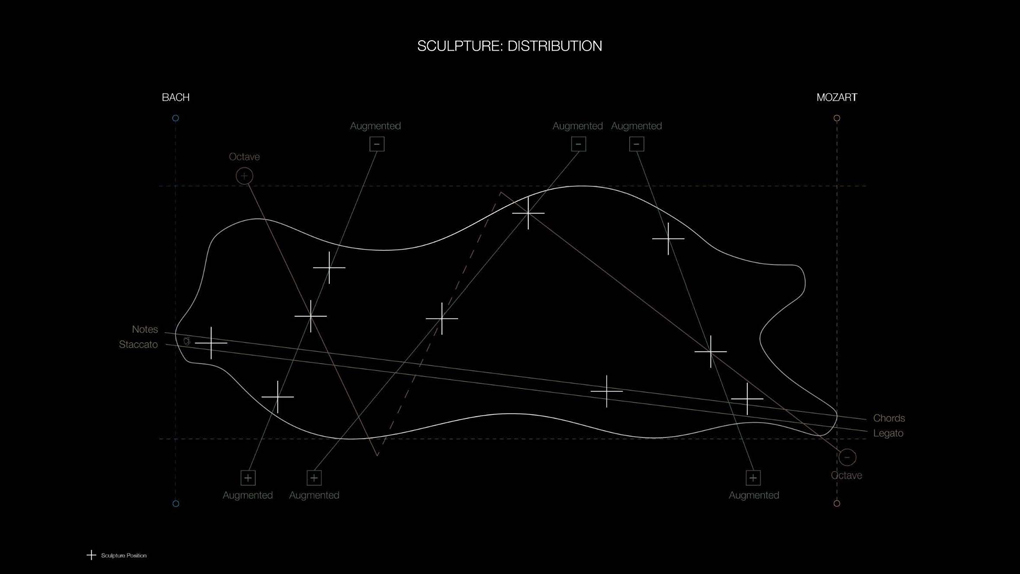

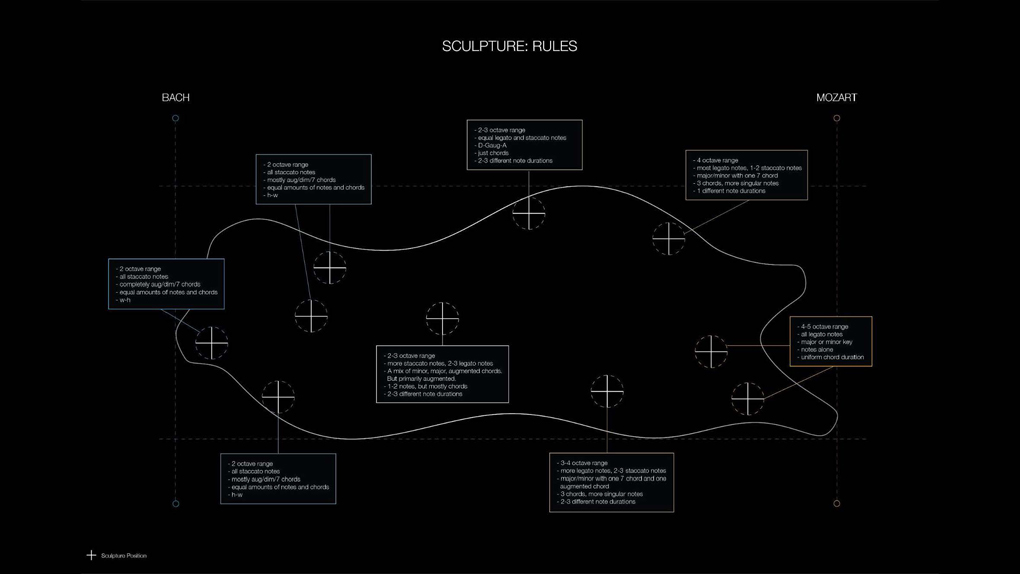

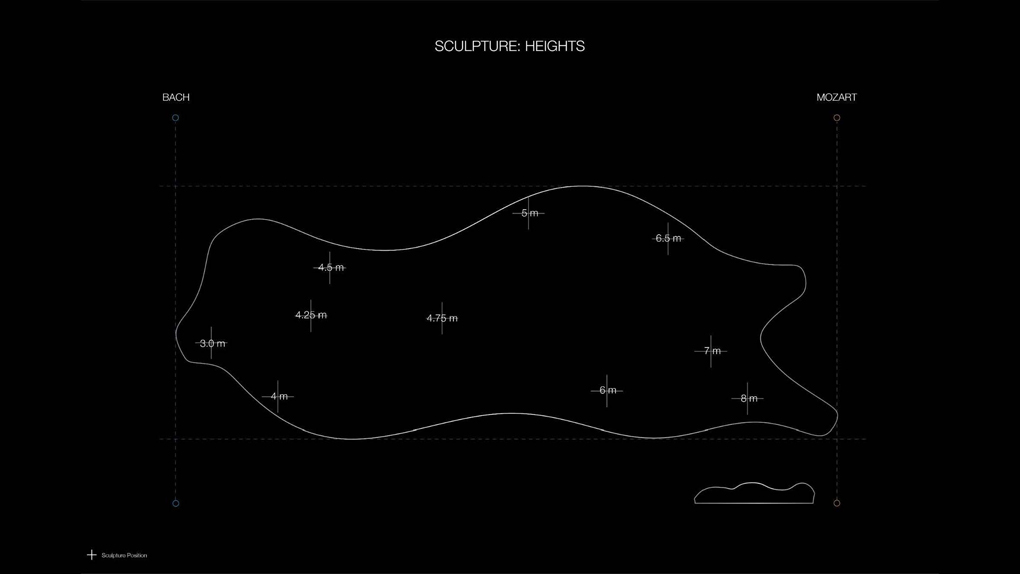

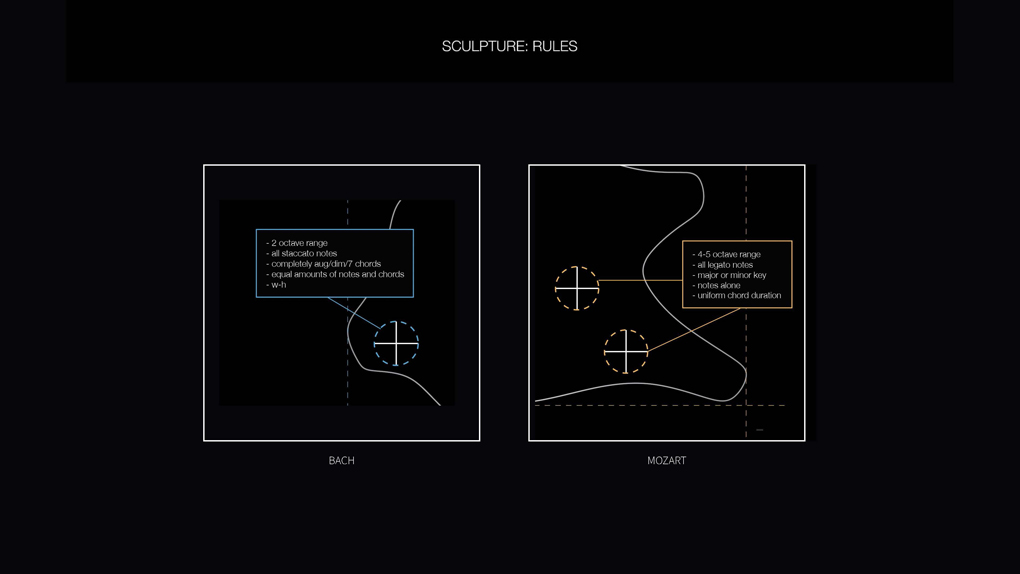

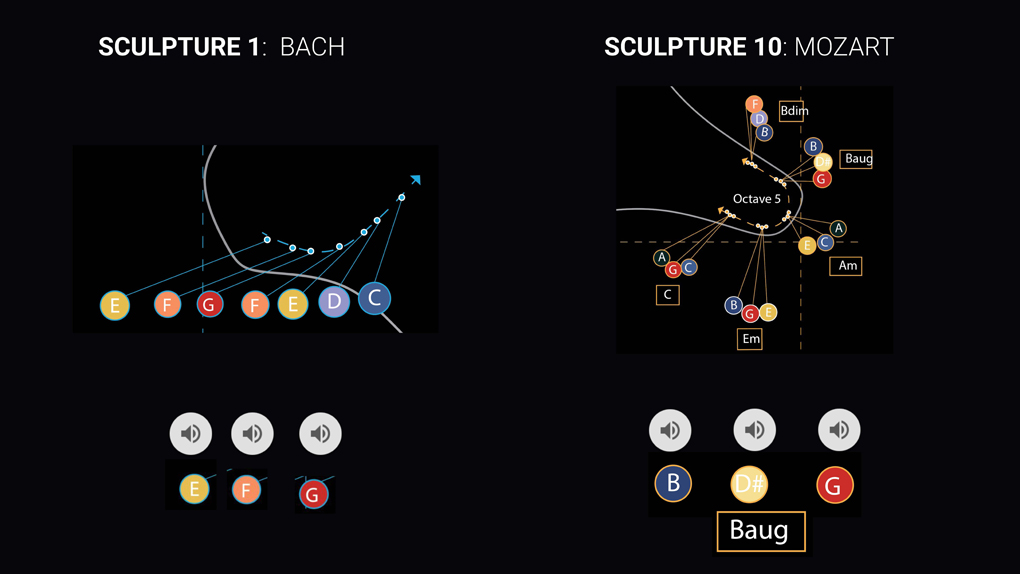

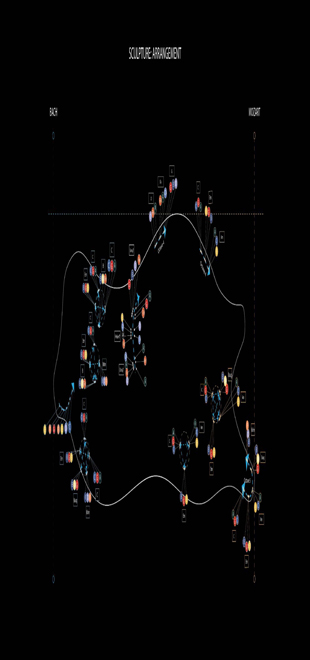

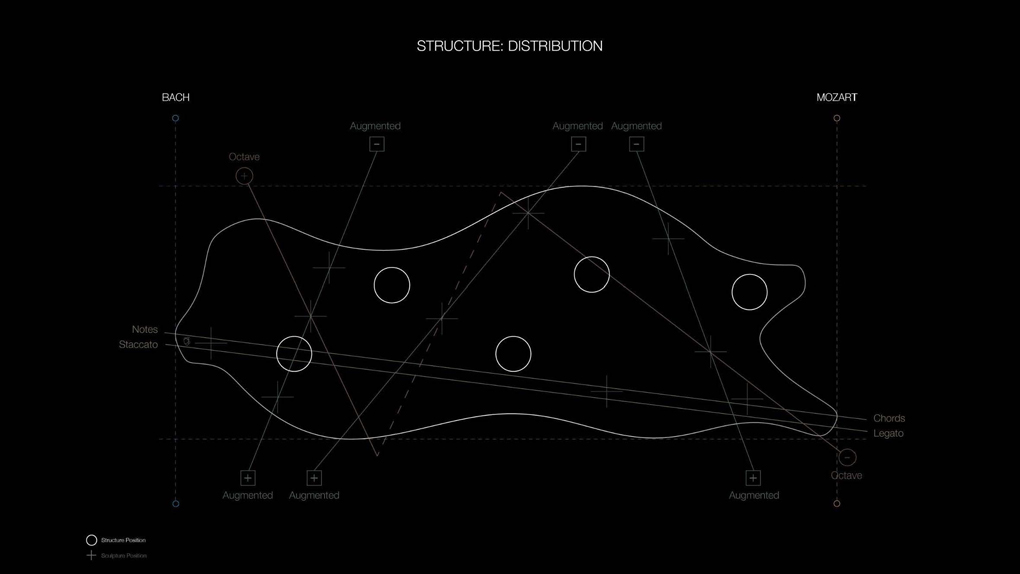

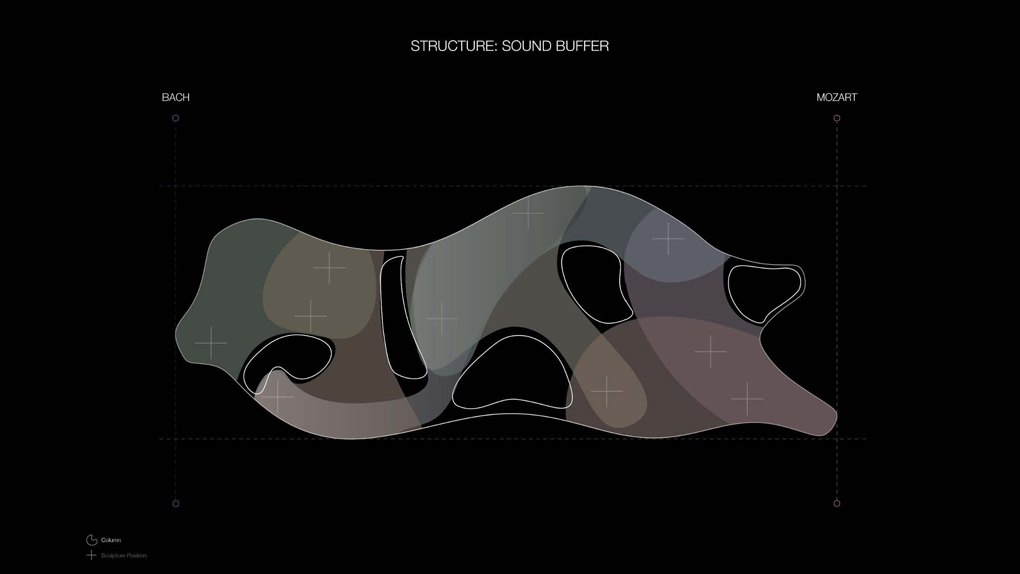

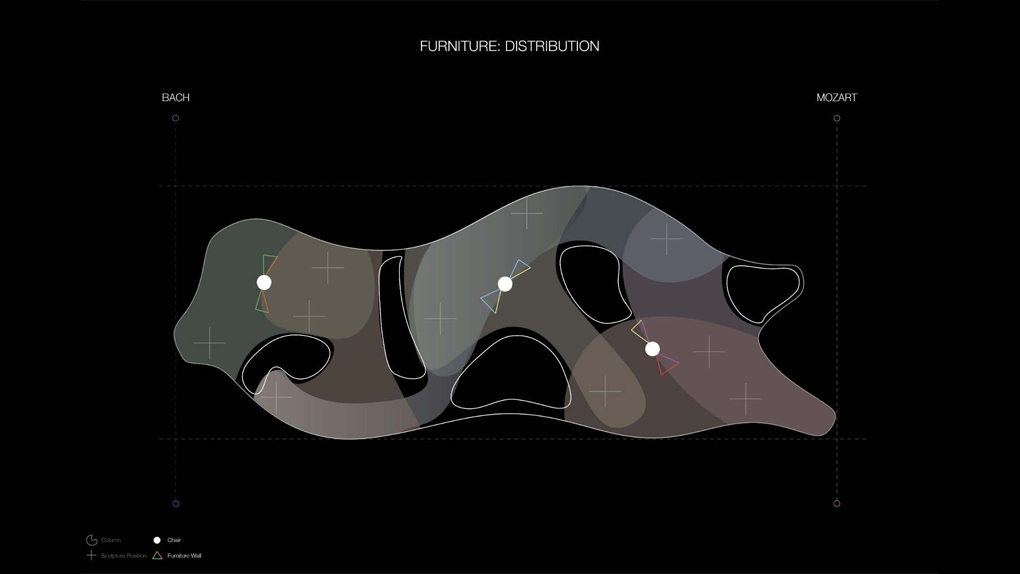

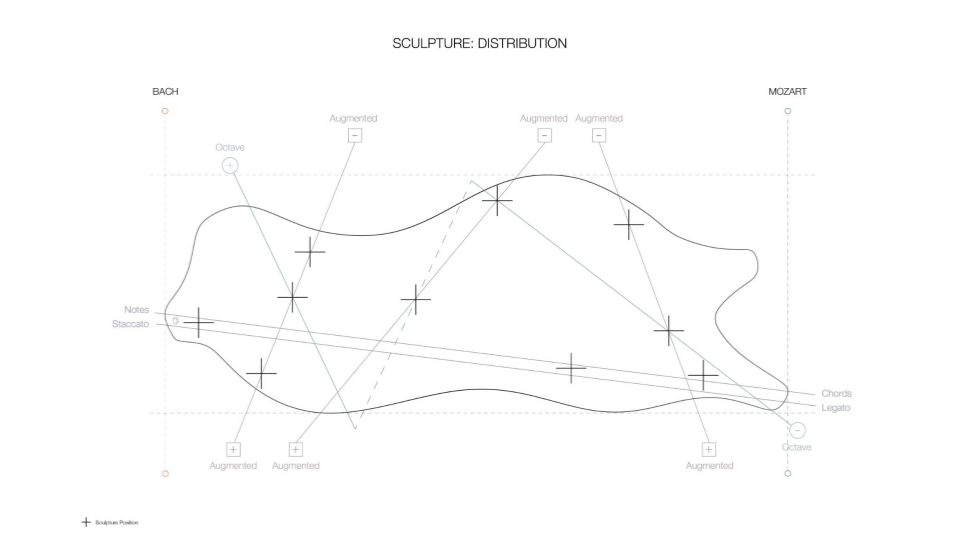

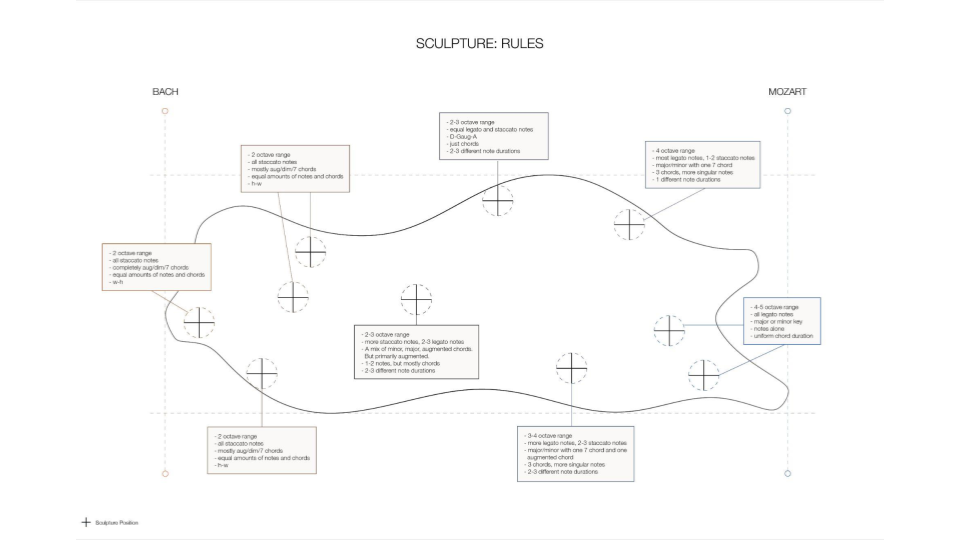

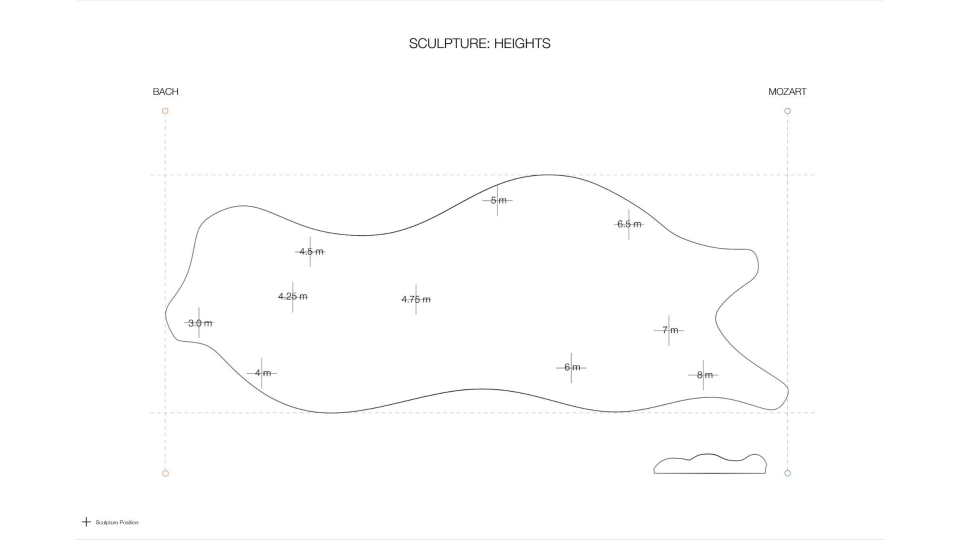

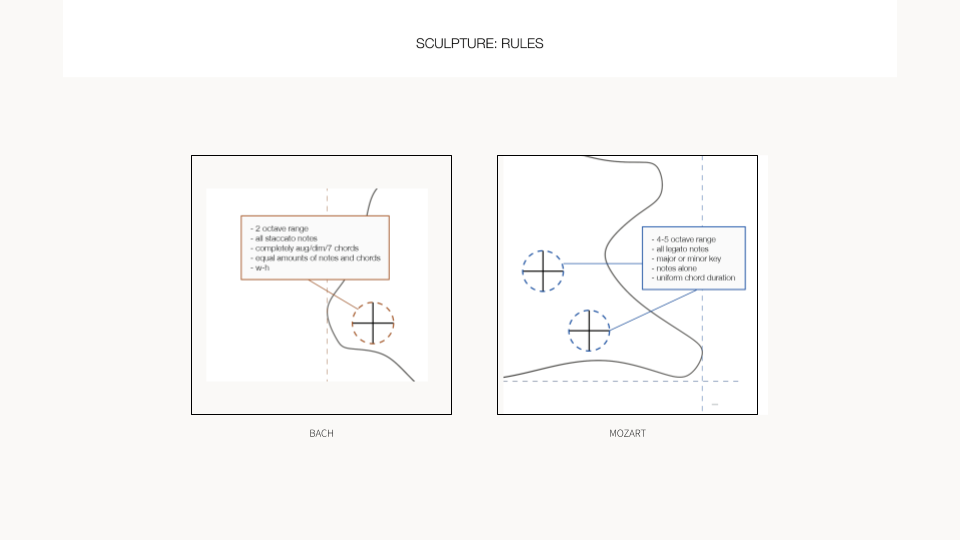

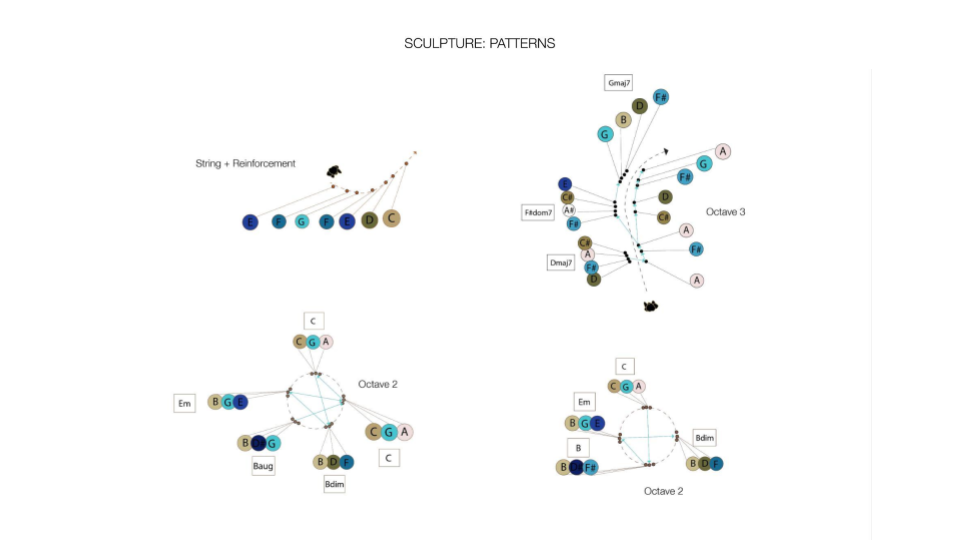

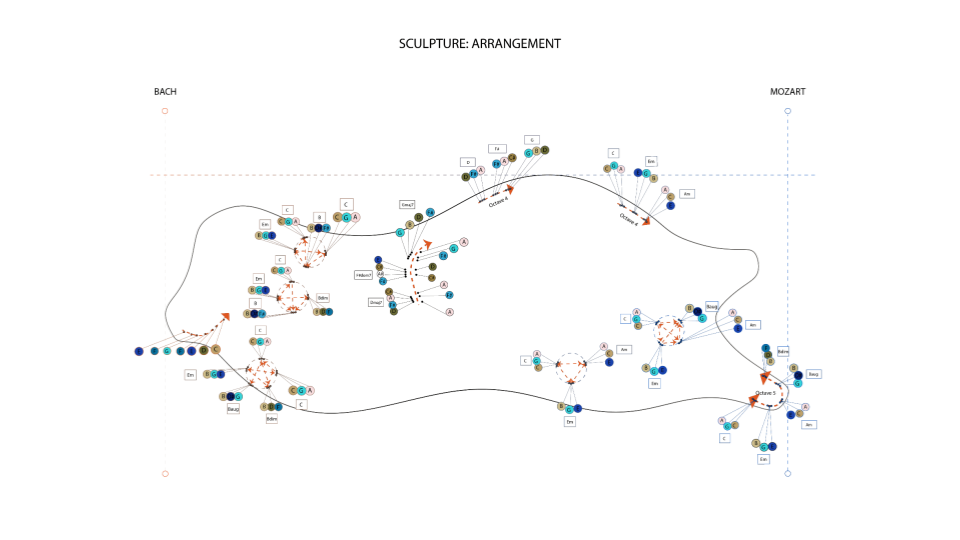

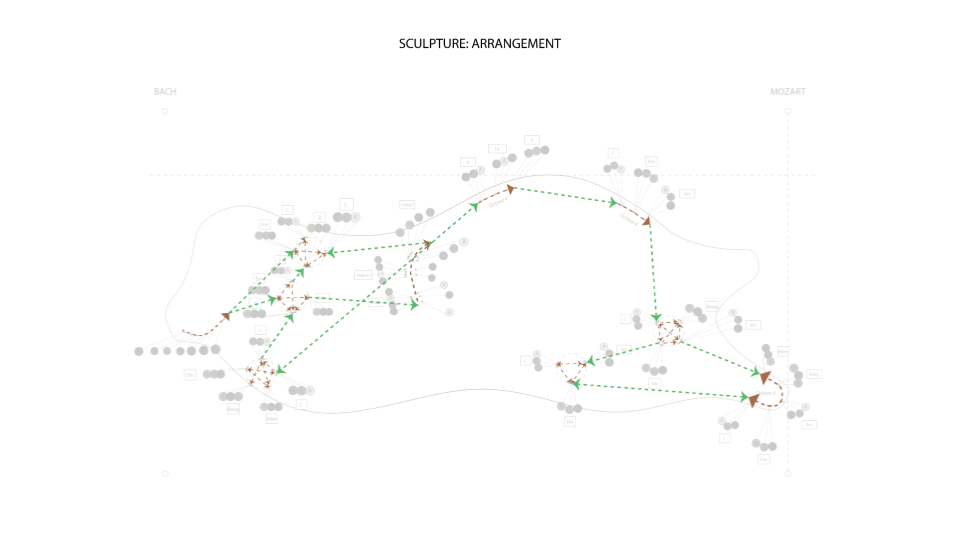

In collaboration with Rohan, I produced these diagrams, which translate these sound qualities into actionable design solutions at the scale of a person. Each "sculpture" throughout the exhibit takes the form of a room-scale string installation with modulated heights and tuning. Their distribution is based on the polarities produced through the semantic space between Bach and Mozart.

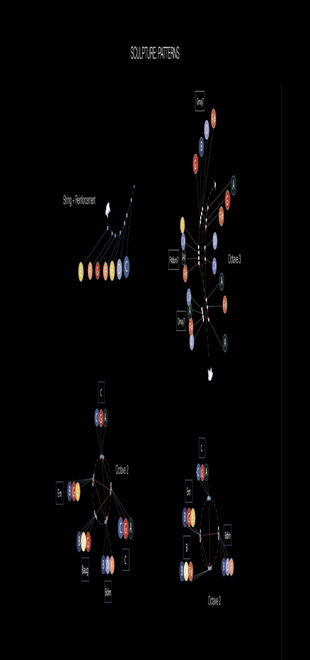

In addition, an actuator responds to user input and plays a series of notes and chords, also based on the polarities we were interested in. The following diagrams, which I created, show specifically how these interactions would take place at the scale of an exhibit.

Sculpture Distribution

Interactive Patterns

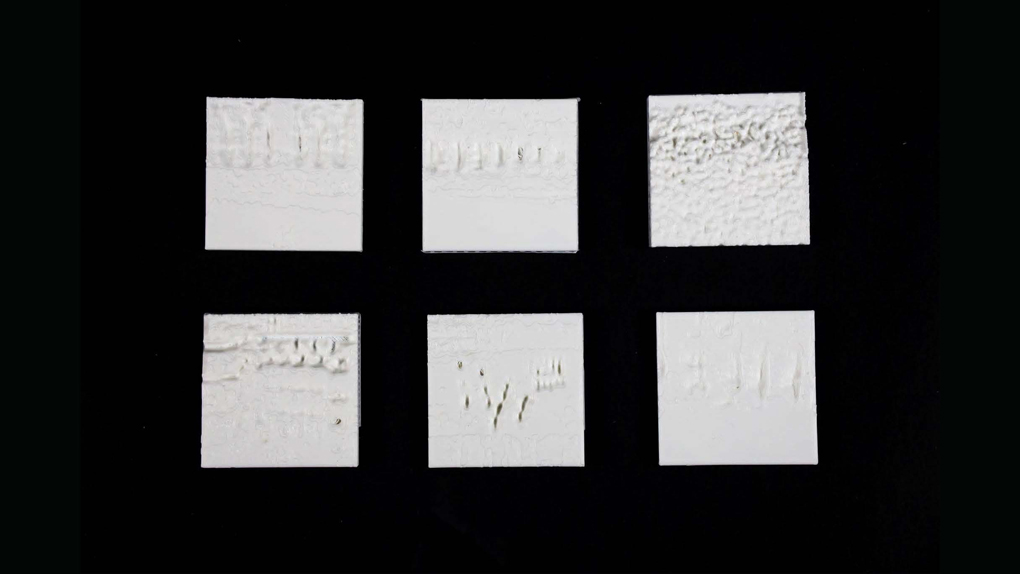

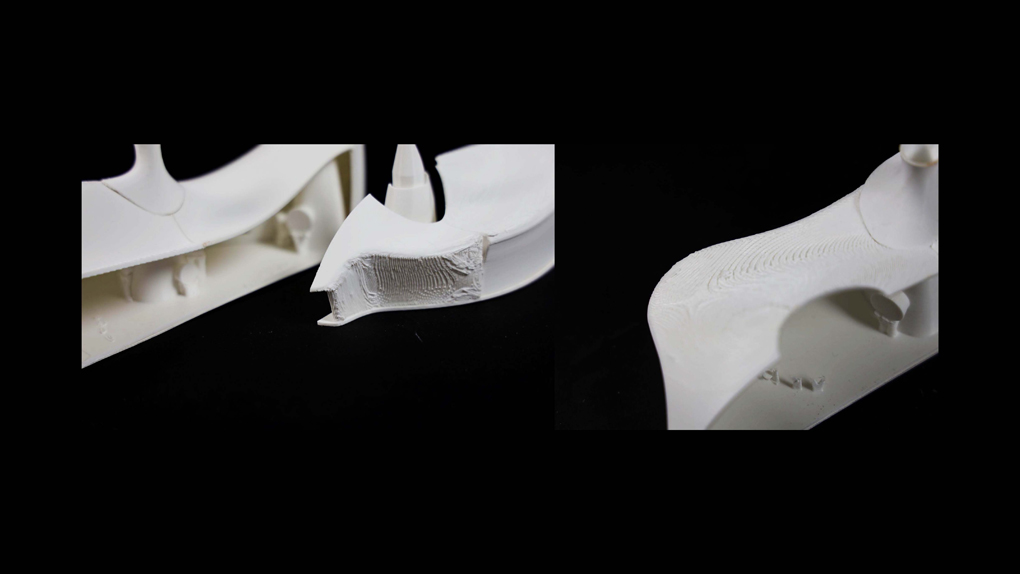

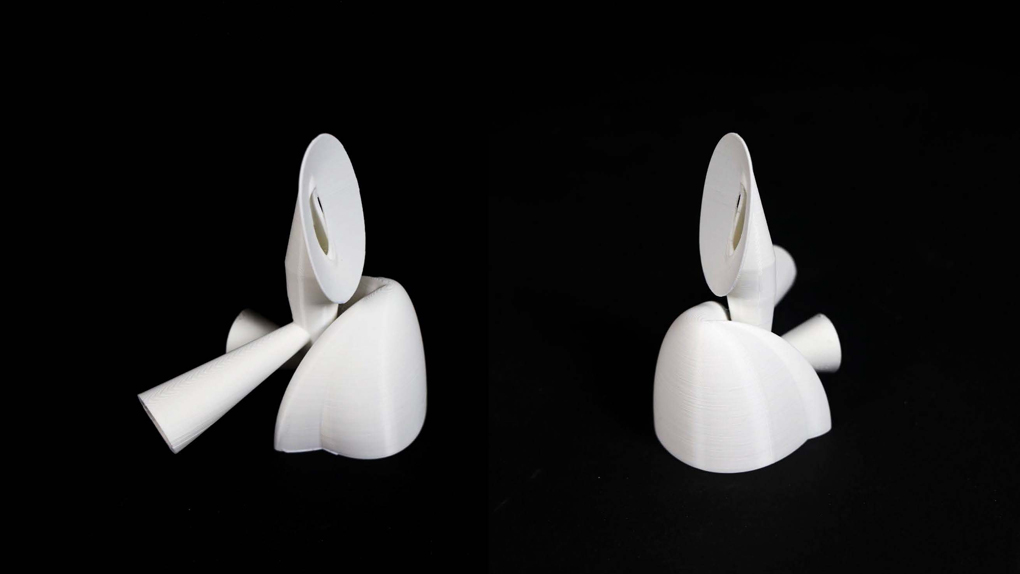

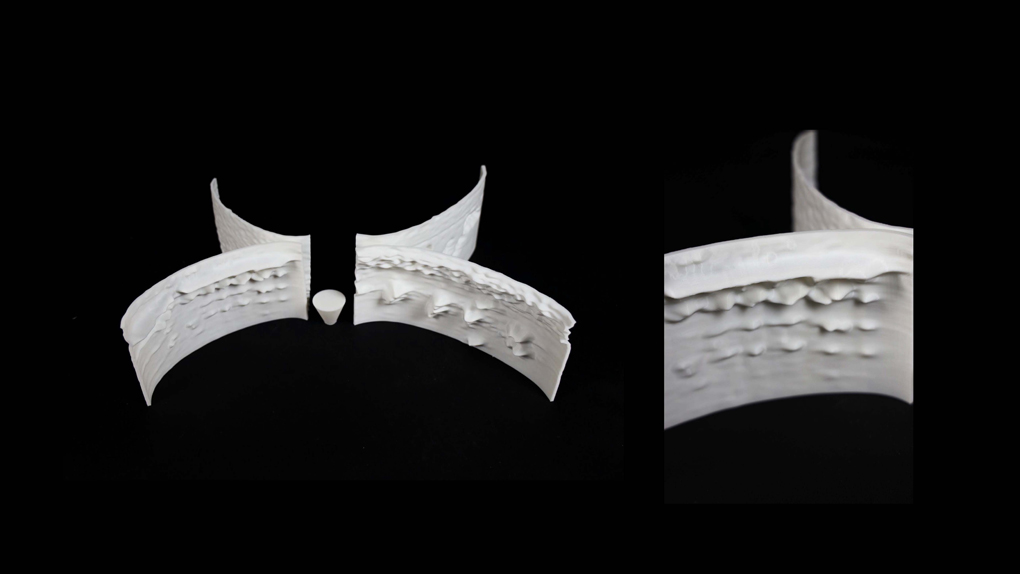

Sculpture Design and Fabrication

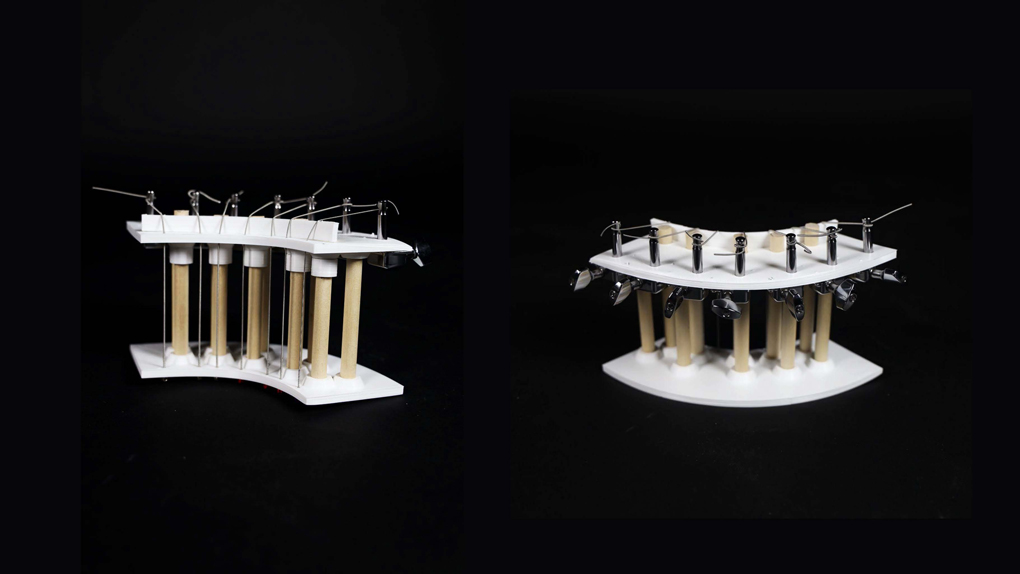

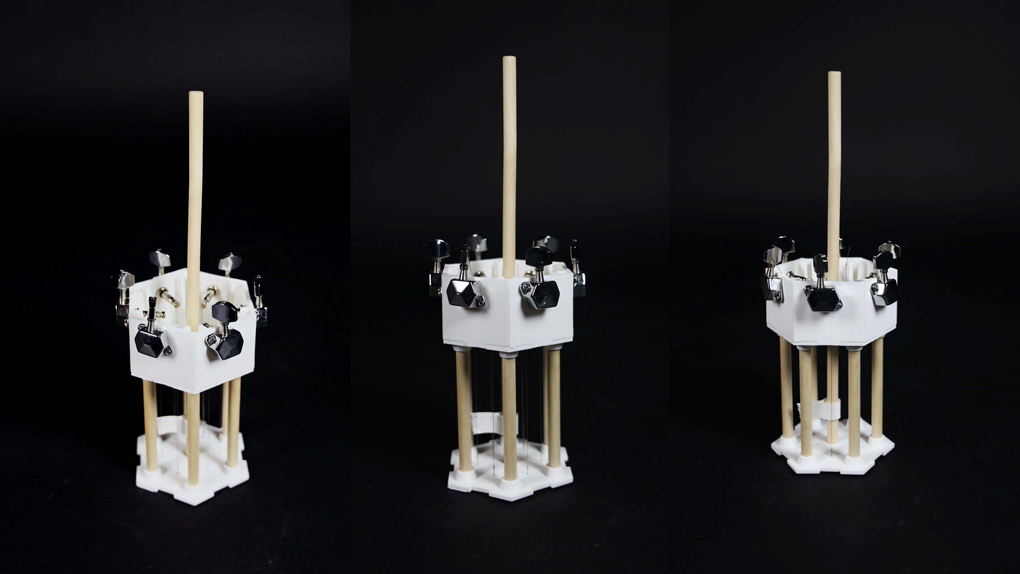

I also created two scale models of each sculpture based on the two polar extremes. They are designed, interactive objects in their own right: I tuned and 3D printed them as instruments that match the distribution at room-scale.

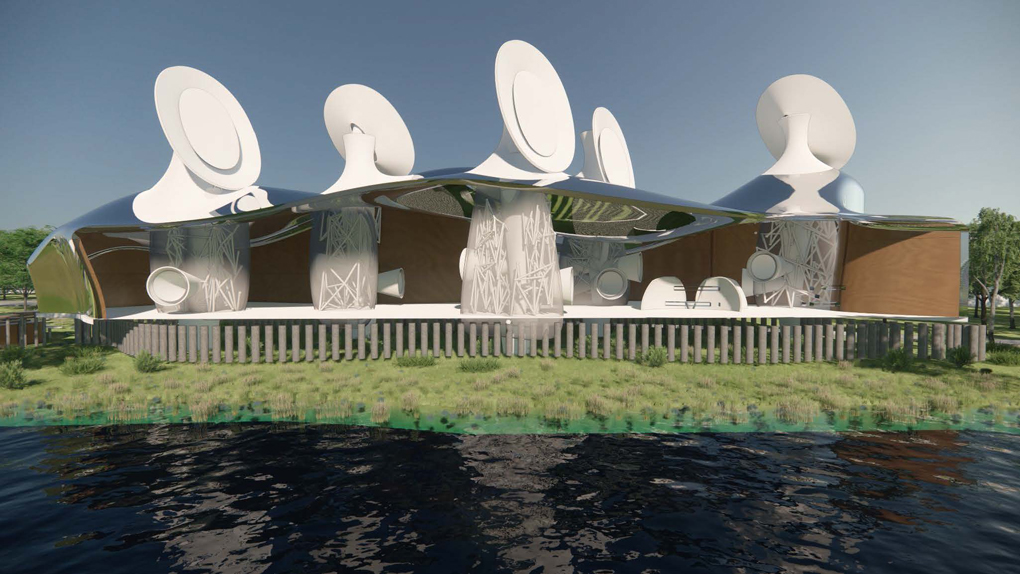

Interactive Exhibit in Unreal Engine

To adequately represent these interactions at room-scale, I also created an Unreal Engine demo which lets the user point and click to travel throughout the exhibit. This allows viewers to see how the body and its movement shape the limitations of an exhibit as an instrument and the sound qualities that result: a user must move back and forth to create input, and the exhibit responds by matching its pace.